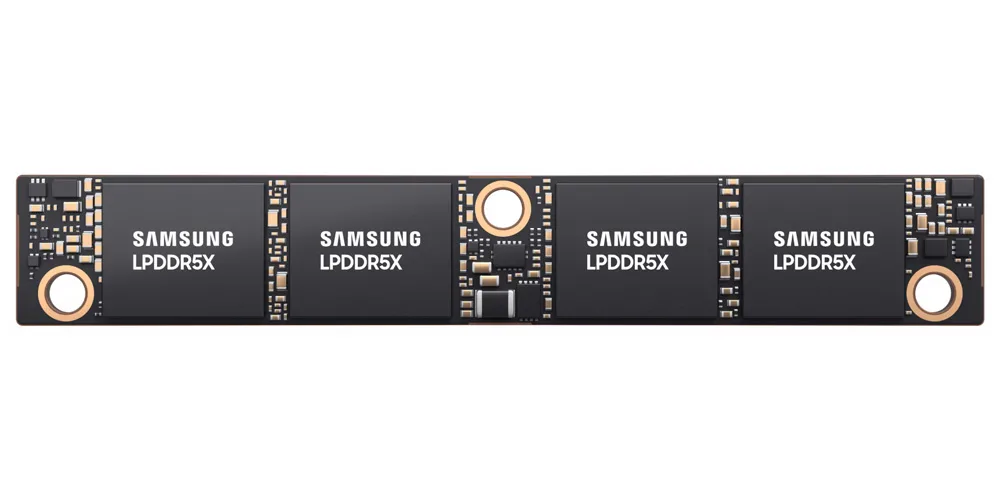

Samsung has announced the development of SOCAMM2 (Small Outline Compression Attached Memory Module), a new LPDDR5X-based memory solution engineered specifically for the efficiency demands of AI data centers. The module is currently being sampled to prospective customers.

The move marks a significant shift in server architecture. It brings Low-Power Double Data Rate (LPDDR) memory, traditionally found in laptops and mobile devices, into the server environment to address the rising power costs of AI inference.

Unlike conventional LPDDR memory, which is soldered directly to the motherboard, SOCAMM2 uses a detachable, compression-attached design that offers modularity without sacrificing the performance benefits of LPDDR. Samsung says SOCAMM2 delivers more than twice the bandwidth of conventional DDR-based RDIMMs while consuming 55% less power. Its horizontal orientation also improves airflow and allows more flexible placement of heatsinks and liquid-cooling components.

SOCAMM2 is a clear response to the problem of energy efficiency, which has become the primary bottleneck for data centers as their workloads shift from model training to large-scale inference.

Samsung has also confirmed that Nvidia is helping it optimize SOCAMM2 for next-generation accelerated infrastructure, and that the modules will be compatible with Nvidia's high-performance AI chips.
